In the popular imagination, the inner workings of artificial intelligence are often shrouded in mystery, inviting analogies that range from a futuristic brain to a digital genie. One of the more popular and scientifically grounded analogies is that AI, particularly a large language model, is simply a “word calculator.” The analogy is technically correct, since at its core AI operates on mathematical principles, but it is also profoundly misleading. It fails to capture the immense complexity, the emergent properties, and the non-human nature of these systems. To truly understand the power and the limitations of AI, we must move beyond the simplistic analogy and examine the reality of a technology that is a mathematical marvel but not a conscious one: a system that can process words with stunning fluency yet lacks a single shred of human understanding.

Beyond the Analogy: The Mathematical Foundation of Language

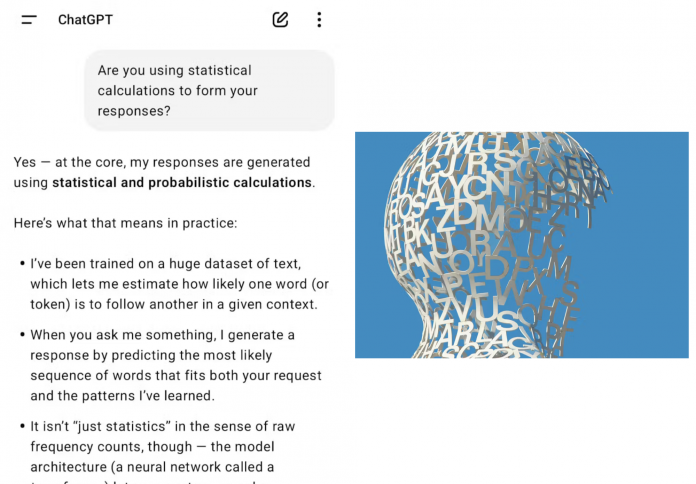

The “word calculator” analogy gets one thing fundamentally right: at its core, a large language model (LLM) is an astonishingly complex mathematical machine. When it “reads” a text, it does not understand the meaning of the words in a human sense. Instead, it splits the text into tokens (whole words or fragments of words) and converts each token into a numerical representation, a “vector” in a high-dimensional space. These vectors are then processed through a long series of mathematical calculations designed to predict the next token in the sequence.
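
To make that pipeline concrete, here is a deliberately tiny sketch: words become vectors, the vectors are turned into a score for every candidate next word, and the scores are converted into probabilities. Everything in it is an assumption for illustration. The four-word vocabulary, the two-dimensional vectors, and the function names `next_word_distribution` and `predict_next` are hypothetical, and a real LLM learns its numbers from data and places many layers of computation between the vector lookup and the final scoring step.

```python
import math

# Hypothetical toy model: every number below is invented for illustration.
# Real LLMs work on tokens, learn vectors with thousands of dimensions,
# and pass them through many transformer layers before scoring the vocabulary.
VOCAB = ["the", "cat", "sat", "satirical"]

# Input vectors: each context word becomes a point in a 2-D space.
INPUT_VECTORS = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
}

# Output vectors: one per candidate next word, used to score that candidate.
OUTPUT_VECTORS = {
    "the": [0.5, 0.0],
    "cat": [2.0, -1.0],
    "sat": [-0.5, 2.0],
    "satirical": [0.0, -1.0],
}


def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_distribution(word):
    """Score each vocabulary word by a dot product with the context vector, then normalize."""
    context = INPUT_VECTORS[word]
    scores = [
        sum(c * o for c, o in zip(context, OUTPUT_VECTORS[candidate]))
        for candidate in VOCAB
    ]
    return dict(zip(VOCAB, softmax(scores)))


def predict_next(word):
    """Return the single most probable next word (a greedy choice)."""
    dist = next_word_distribution(word)
    return max(dist, key=dist.get)


print(predict_next("the"))  # → cat
print(predict_next("cat"))  # → sat
```

Nothing in this code “knows” what a cat is: “sat” wins only because its output vector happens to point in the same direction as the vector for “cat.”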

The entire process is based on statistical patterns. The model has been trained on trillions of words of text, much of it drawn from the internet, and it has learned to estimate the probability of each possible next word given the words that came before it. It has learned, for example, that “The cat” is far more likely to be followed by “sat” than by “satirical.” In this sense, the AI is a master of statistical inference: a machine that calculates, word by word, which continuations are most probable. This is the scientific basis for the “word calculator” analogy, and it is a useful reminder that beneath the human-like facade of an AI lies an elegant mathematical machine.
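
The statistical idea can be illustrated with a much simpler ancestor of the LLM, a bigram model, which estimates the probability of the next word purely from how often word pairs occur in a body of text. This is a sketch under simplifying assumptions: the corpus is a tiny made-up one, the function names are hypothetical, and a bigram model looks at only the single preceding word, whereas an LLM conditions on the entire preceding context and uses learned neural-network weights rather than raw counts.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, word):
    """Convert the follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())  # unseen word: no predictions
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


# A tiny, made-up corpus standing in for trillions of words of training text.
corpus = (
    "the cat sat on the mat . the cat sat by the door . "
    "the cat slept . a satirical cartoon showed a cat ."
)
counts = train_bigrams(corpus)
probs = next_word_probabilities(counts, "cat")
print(probs)  # → {'sat': 0.5, 'slept': 0.25, '.': 0.25}
```

Because “cat” is never followed by “satirical” in this corpus, the model assigns that continuation zero probability; a real LLM would give it a small but nonzero one.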

The Illusion of Understanding

The most profound flaw in the “word calculator” analogy is that it cannot account for the illusion of understanding that AI creates. A calculator's output never looks like thought; an LLM's output often does. Yet while an AI can generate coherent and even creative text, it does not “understand” the words in the human sense. It lacks consciousness, intent, and lived experience. It cannot feel emotion, it cannot empathize, and it cannot reason from first principles. It is a powerful pattern-matching engine that can generate convincing text, but it is not a mind.

This illusion of understanding is what makes AI both so powerful and so dangerous. A person can ask an AI chatbot for medical advice, and the AI will generate a response that is coherent and convincing whether or not it is correct. But the AI has no actual knowledge of medicine and no understanding of the nuances of the human body. It is simply generating a response based on the statistical patterns in the medical texts it was trained on. AI can provide a quick and easy answer, but it cannot provide the human judgment, the lived experience, and the understanding that are essential for true wisdom.

The Unpredictable: Emergent Properties and the Unknown

The “word calculator” analogy also fails to capture the more mysterious side of LLMs: their “emergent properties.” These are capabilities that appear in a model as it scales, without being explicitly programmed. For example, a model trained on a massive amount of data may suddenly develop the ability to translate between languages, even if it was never explicitly trained to do so. This unpredictability is both a source of the AI’s power and a source of concern for researchers. It makes the “word calculator” analogy feel insufficient, as it doesn’t account for these seemingly magical abilities.

The emergent properties of AI suggest that there is more to these systems than simple pattern-matching. They hint at a kind of “intelligence” that is not merely a reflection of the training data but something new and hard to predict. This is why the conversation around AI has become so complex and so urgent. We are not dealing with a simple tool; we are dealing with a new kind of technology that is capable of surprising us in ways we never imagined.

The Path Forward: A New Vocabulary for AI

The time has come to move beyond the simplistic analogies and use a new vocabulary for discussing AI. We should stop thinking of AI as a “mind” or a “calculator” and instead think of it as a new kind of “cognitive tool” that can augment human intelligence. This new vocabulary would more accurately reflect the technology’s capabilities and its limitations. It would allow us to have a more nuanced and more productive conversation about the future of AI.

The future of AI is not one where humans and machines are at odds. It is one where they are collaborators, working together to create a new kind of intelligence. By embracing a new vocabulary and a new way of thinking about AI, we can ensure that this powerful technology is used to build a more just, more equitable, and more humane world. The AI revolution is a powerful force for change, and it is our responsibility to ensure that it is one that benefits everyone.